- Get the code out of source control fresh.

- Does it build? Yes then continue, No then stop the code review.

- Run the unit tests.

- Do they run and all pass? Yes then continue, No then stop the code review.

- Check the unit test code coverage.

- Is the coverage at or above roughly 60%? Yes then continue, No then stop the code review unless there is a good reason for the lower coverage that the review team is happy with.

- Check the code metrics (Cyclomatic Complexity and Maintainability Index)

- Are the metrics within agreed boundaries? Yes then continue, No then stop the code review.

- Run the static code analysis against the agreed rule set.

- Are there any warnings / errors? Yes then stop the code review, No then continue.

- Once you get to this point, the development practices have been followed and you can proceed to review the actual code.
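The checklist above is essentially a chain of gates, and it can be sketched as a small function. This is only an illustration in Python (chosen for brevity, not because the workflow depends on it); `ReviewInputs`, its field names and `pre_review_gate` are hypothetical names invented for the sketch.

```python
from dataclasses import dataclass


@dataclass
class ReviewInputs:
    """Results gathered before the review. All field names are illustrative."""
    builds: bool
    tests_pass: bool
    coverage_percent: float
    coverage_waiver: bool      # review team accepted a reason for low coverage
    metrics_in_bounds: bool
    analysis_issue_count: int  # warnings plus errors from static analysis


def pre_review_gate(r: ReviewInputs, min_coverage: float = 60.0) -> str:
    """Return 'proceed', or the first reason to stop, in checklist order."""
    if not r.builds:
        return "stop: build failed"
    if not r.tests_pass:
        return "stop: unit tests failed"
    if r.coverage_percent < min_coverage and not r.coverage_waiver:
        return "stop: coverage below threshold"
    if not r.metrics_in_bounds:
        return "stop: code metrics out of bounds"
    if r.analysis_issue_count > 0:
        return "stop: static analysis reported issues"
    return "proceed"
```

The checks run in the same order as the list, so the function reports the earliest failing step rather than every failure at once.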

Unit Test Coverage

Whilst you are developing your software you should be writing tests to exercise that code. Whether you practice test driven development and write your tests first or write tests after the fact, you need a decent level of test coverage. This gives you a level of confidence that the code you are writing does what you expect it to. It also gives you a safety blanket when you need to refactor your code. If you make a change in one area, does it break something somewhere else? Unit tests should give you that answer.

The screenshot below shows the Test Explorer view in Visual Studio 2012. From this view you can run all of your unit tests. As of Visual Studio 2012 Update 1, you can group your tests based on pass outcome, length of execution and project. Think of this view as your project health dashboard. If you have a good level of coverage and the tests are all green, then you can carry on developing. If you have any red tests then you need to work out why and fix them. Don’t let this view lead you into a false sense of security though. You still need to write tests to a decent level of coverage and ensure you are testing the right things.

You can check your test coverage very easily in Visual Studio. Either click the little drop down ‘Run’ menu in the Test Explorer, or open the ‘Test’ menu in Visual Studio, then open ‘Analyze Code Coverage’ and select ‘All Tests’. This will give you a view similar to below.

In the screenshot above you can see that the project overall has a coverage of 73%. This is a good number. It really is not worth chasing 100%, as you end up testing things that just don’t need to be tested; 60 – 70% is a much more realistic goal. In the example above you can see each assembly in the project, and you can drill down into more detail to look at class and method coverage. The key metric here is the ‘Covered % Blocks’ column on the right. It makes sense to routinely check this view so you can keep an eye on your overall coverage. If anything creeps below 60%, then you can take a look. Sometimes you may feel that the area in question doesn’t need extra tests, but you can only make that call when you see the stats in front of your eyes. Purists will argue you need to cover every last inch of code in tests, but we live in the real world and need to be more pragmatic about it.
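To make the arithmetic behind that column concrete, here is a minimal sketch of how a block coverage percentage and the 60% check could be computed from covered and not-covered block counts. It is written in Python for illustration only; the function names and the assembly names in the usage note are made up, and treating an empty assembly as fully covered is my own assumption.

```python
from typing import Dict, List, Tuple


def covered_percent(covered_blocks: int, not_covered_blocks: int) -> float:
    """Percentage of blocks exercised by the tests."""
    total = covered_blocks + not_covered_blocks
    if total == 0:
        return 100.0  # assumption: nothing to cover counts as fully covered
    return 100.0 * covered_blocks / total


def below_threshold(assemblies: Dict[str, Tuple[int, int]],
                    threshold: float = 60.0) -> List[str]:
    """Names of assemblies whose block coverage is under the threshold.

    `assemblies` maps a name to (covered_blocks, not_covered_blocks).
    """
    return sorted(
        name
        for name, (covered, not_covered) in assemblies.items()
        if covered_percent(covered, not_covered) < threshold
    )
```

For example, `below_threshold({"Core.dll": (730, 270), "Web.dll": (110, 90)})` flags only the hypothetical `Web.dll`, which sits at 55%, while `Core.dll` at 73% passes.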

If you do have any area of code where test coverage has slipped, then Visual Studio makes it very easy to find these areas.

In the code coverage window, click the highlighted button above (Show Code Coverage Coloring), and then double click on a method that has low coverage. You will be taken to the source code file, and any uncovered blocks will be highlighted in red. In the example below, there are 3 items that are uncovered. The first and third examples are where an exception is being thrown based on a null argument check; I would add tests in for those. The middle example just has a public property returning a string. In my view there is no point adding a test for this, as you are just testing that the language works at that point.
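The distinction between those two kinds of uncovered block can be sketched as follows. This is a Python analogue rather than the code from the screenshot: `Customer` is a hypothetical class, and `ValueError` stands in for the null-argument exception.

```python
import unittest


class Customer:
    """Hypothetical class, used only to illustrate the point above."""

    def __init__(self, name):
        # Guard clause: this is the kind of branch worth covering with a test.
        if name is None:
            raise ValueError("name must not be None")
        self._name = name

    @property
    def name(self):
        # Trivial accessor: a dedicated test would only prove the language works.
        return self._name


class CustomerGuardTests(unittest.TestCase):
    def test_none_name_is_rejected(self):
        with self.assertRaises(ValueError):
            Customer(None)
```

Saved to a file, this can be run with `python -m unittest`. The guard clause gets a test because it encodes a decision; the property does not.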

Code Metrics

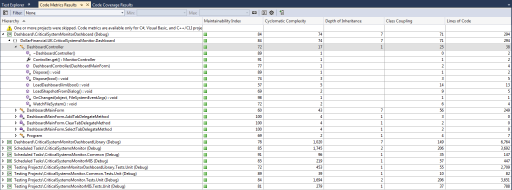

Unit test coverage is only one part of determining the health of your code base. You can have high test coverage and still have code that is tangled and hard to read and maintain. Visual Studio provides tools to help you look, at a glance, for smells in the structure of your code. To access this view, open the ‘Analyze’ menu in Visual Studio and select ‘Calculate Code Metrics for Solution’. This will give you a view like below.

| | Maintainability Index | Cyclomatic Complexity | Class Coupling | Depth of Inheritance |
|---|---|---|---|---|
| Green | > 60 | < 10 | < 20 | < 5 |
| Yellow | 40 - 60 | 10 - 15 | | |
| Red | < 40 | > 15 | > 20 | |

Maintainability Index: The Maintainability Index calculates an index value between 0 and 100 that represents the relative ease of maintaining the code. A high value means better maintainability. Color coded ratings can be used to quickly identify trouble spots in your code. Visual Studio’s own color coding is more lenient than the bands in the table above: a green rating is between 20 and 100 and indicates that the code has good maintainability, a yellow rating is between 10 and 19 and indicates that the code is moderately maintainable, and a red rating is between 0 and 9 and indicates low maintainability.
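For a feel of what goes into that number, the sketch below follows the formula Microsoft has published for this metric, as far as I understand it: a combination of Halstead volume, cyclomatic complexity and lines of code, rescaled to the 0 to 100 range. Treat it as an approximation in Python; the function name is my own, and any rounding Visual Studio applies is not reproduced here.

```python
import math


def maintainability_index(halstead_volume: float,
                          cyclomatic_complexity: float,
                          lines_of_code: float) -> float:
    """Approximate Maintainability Index on a 0..100 scale.

    Assumes halstead_volume and lines_of_code are greater than zero,
    because both are passed to a natural logarithm.
    """
    raw = (171
           - 5.2 * math.log(halstead_volume)
           - 0.23 * cyclomatic_complexity
           - 16.2 * math.log(lines_of_code))
    # Rescale from the original 0..171 range and clamp negatives to zero.
    return max(0.0, raw * 100 / 171)
```

The shape of the formula is the useful part: longer, denser and more branching code pushes the index down, so splitting a large method tends to raise it.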

Cyclomatic Complexity: Cyclomatic complexity (or conditional complexity) is a software measurement metric that is used to indicate the complexity of a program. It directly measures the number of linearly independent paths through a program’s source code. Cyclomatic complexity may also be applied to individual functions, modules, methods or classes within a program. A higher number is bad. I generally direct my team to keep this value below 7. If the number creeps up higher, it means your method is starting to get complex and could do with refactoring, generally by extracting code into separate, well named methods. This will also increase the readability of your code.
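A common way to approximate this metric is to count decision points and add one. The sketch below does that for Python source using the standard `ast` module. It is an illustration of the counting idea only, not how Visual Studio computes its figure, and it deliberately ignores some constructs (comprehensions, for instance); `approx_complexity` and the `shipping` sample are hypothetical.

```python
import ast

# Node types treated as one extra path each in this simplified count.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def approx_complexity(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points."""
    score = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):
            # 'a and b and c' adds two extra paths, one per additional operand.
            score += len(node.values) - 1
    return score


SAMPLE = '''
def shipping(order):
    if order is None:
        raise ValueError("order")
    if order.express and order.weight > 10:
        return 30
    for item in order.items:
        if item.fragile:
            return 20
    return 5
'''
```

Here `approx_complexity(SAMPLE)` gives 6: one base path, three `if` statements, one `for` loop and one `and`. Extracting the loop into its own well named function would split that count across two smaller functions.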

Depth of Inheritance: Depth of inheritance, also called depth of inheritance tree (DIT), is defined as “the maximum length from the node to the root of the tree”. Low values imply less complexity and less potential for errors, but also less code reuse through inheritance. High values indicate greater potential for code reuse through inheritance, but also greater potential for errors. Due to lack of sufficient data, there is no currently accepted standard for DIT values. I find keeping this value below 5 is a good measure.
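The quoted definition translates almost directly into code. Below is a minimal Python sketch that measures the longest path from a class up to the root `object`. The function and the example classes are hypothetical, and exactly how Visual Studio counts the root type is not something this sketch tries to reproduce.

```python
def depth_of_inheritance(cls: type) -> int:
    """Longest path from cls up to the root `object`, counted in edges."""
    if cls is object:
        return 0
    # With multiple bases, the deepest branch determines the depth.
    return 1 + max(depth_of_inheritance(base) for base in cls.__bases__)


# Hypothetical hierarchy for illustration.
class Shape:
    pass


class Polygon(Shape):
    pass


class Square(Polygon):
    pass
```

With this hierarchy `depth_of_inheritance(Square)` is 3, so a guideline of staying below 5 leaves little room for further layers.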

Class Coupling: Class coupling is a measure of how many classes a single class uses. A high number is bad and a low number is generally good with this metric. Class coupling has been shown to be an accurate predictor of software failure and recent studies have shown that an upper-limit value of 9 is the most efficient.

Lines of Code (LOC): Indicates the approximate number of lines in the code. The count is based on the IL code and is therefore not the exact number of lines in the source code file. A very high count might indicate that a type or method is trying to do too much work and should be split up. It might also indicate that the type or method might be hard to maintain.

Based on the metric descriptions above, you can use the code metrics view to drill down into your code and get a very quick view of areas that start to break these guidelines. You can very quickly start to highlight smells in your code and respond to them sooner rather than later. I routinely stop coding and spend 30 minutes or so going through my code metrics to see if I have gone astray. These metrics will help to keep your code honest.
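If you export or copy the metric values out of the view, the same triage can be automated. The sketch below applies the green/yellow/red bands from the table earlier in this section to Maintainability Index and Cyclomatic Complexity, the two columns with all three bands filled in. It is a Python illustration; the function names and the method names in the usage note are made up.

```python
from typing import Dict, List, Tuple


def rate_maintainability(index: float) -> str:
    """Green above 60, yellow from 40 to 60, red below 40."""
    if index > 60:
        return "green"
    if index >= 40:
        return "yellow"
    return "red"


def rate_complexity(complexity: int) -> str:
    """Green below 10, yellow from 10 to 15, red above 15."""
    if complexity < 10:
        return "green"
    if complexity <= 15:
        return "yellow"
    return "red"


def needs_attention(methods: Dict[str, Tuple[float, int]]) -> List[str]:
    """Names of methods where either metric is not green.

    `methods` maps a name to (maintainability_index, cyclomatic_complexity).
    """
    return sorted(
        name
        for name, (index, complexity) in methods.items()
        if rate_maintainability(index) != "green"
        or rate_complexity(complexity) != "green"
    )
```

For instance, `needs_attention({"Parse": (72, 4), "Validate": (55, 8), "Import": (35, 22)})` returns `["Import", "Validate"]`, which is the short list to look at during one of those 30 minute check-ins.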

Static Code Analysis

The final code quality tool I want to discuss is static code analysis. This has been available for Visual Studio for quite a while now. It used to be a separate tool called FxCop, but it is now directly integrated into Visual Studio. Static code analysis runs a set of rules over your code to look for common pitfalls and problems that arise from day to day development. You can change the rule set to turn on/off rules that are relevant to you. You can also change the severity of each rule. For example, do you want a violation to produce a compiler warning, or actually break the build?

If you have a large code base and are thinking of introducing static code analysis, I recommend starting off with the ‘Microsoft Managed Minimum Rules’ rule set and getting all of those passing first. If you try to jump straight into the ‘Microsoft All Rules’ rule set you will quickly become swamped and then most likely turn off the code analysis.

Adding code analysis to your solution is easy. It is managed at the project level. Right click on the project in the solution explorer and select ‘Properties’. When the properties window appears, select the ‘Code Analysis’ tab as in the screen shot below.

First you should select the ‘Enable Code Analysis on Build’ check box. This will make sure the rules are run every time you build your code. This forces you to see the issues with your code every time you build instead of relying on yourself to manually check.

In the drop down box you can select which rule set to use. Generally the rule sets provided by Microsoft are enough to work with. What we found was that some rules were not as relevant to us, so I created a custom rule set. You can see where I selected this above. The rule set is called ‘DFGUK2012’.

You can add your own rule set easily. In your solution, right click and select ‘Add New Item’. When the dialog box appears, select ‘Code Analysis Rule Set’, as shown below.

Then in your ‘project properties’ rule set drop down box, instead of selecting one of the Microsoft rule sets, select ‘<Browse>’, and then browse to your custom rule set. If you double click on the rule set added to your solution, you will be shown the rule set editor, as shown below.

From here you can enable/disable rules to suit your project and team. You can also select whether you want a broken rule to show as a compiler warning or error.
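Behind the editor, a rule set is an XML file, which makes it easy to inspect or diff in code review. The Python sketch below parses a small example and reports which rules would break the build. The embedded rule set is a hypothetical one written for this illustration (it is not the ‘DFGUK2012’ file), the rule choices and actions are assumptions, and the helper names are my own.

```python
import xml.etree.ElementTree as ET

# Hypothetical rule set content, shaped like a .ruleset file.
RULESET_XML = """<RuleSet Name="Team Rules" Description="Hypothetical example" ToolsVersion="11.0">
  <Rules AnalyzerId="Microsoft.Analyzers.ManagedCodeAnalysis" RuleNamespace="Microsoft.Rules.Managed">
    <Rule Id="CA1062" Action="Error" />
    <Rule Id="CA2000" Action="Warning" />
    <Rule Id="CA1704" Action="None" />
  </Rules>
</RuleSet>
"""


def rule_actions(ruleset_xml: str) -> dict:
    """Map each rule Id to its configured Action."""
    root = ET.fromstring(ruleset_xml)
    return {rule.get("Id"): rule.get("Action") for rule in root.iter("Rule")}


def build_breaking_rules(ruleset_xml: str) -> list:
    """Rule Ids whose violations are reported as errors."""
    return sorted(
        rule_id
        for rule_id, action in rule_actions(ruleset_xml).items()
        if action == "Error"
    )
```

In this example only CA1062 (validate arguments of public methods) is set to break the build, CA2000 is a warning, and CA1704 is switched off. Keeping the file in source control means a change in strictness shows up in a diff like any other change.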
